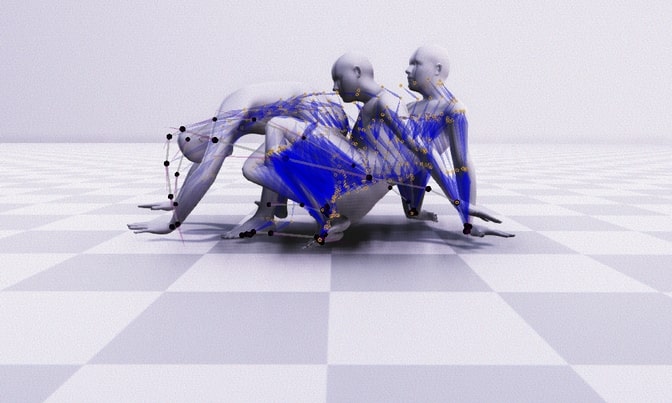

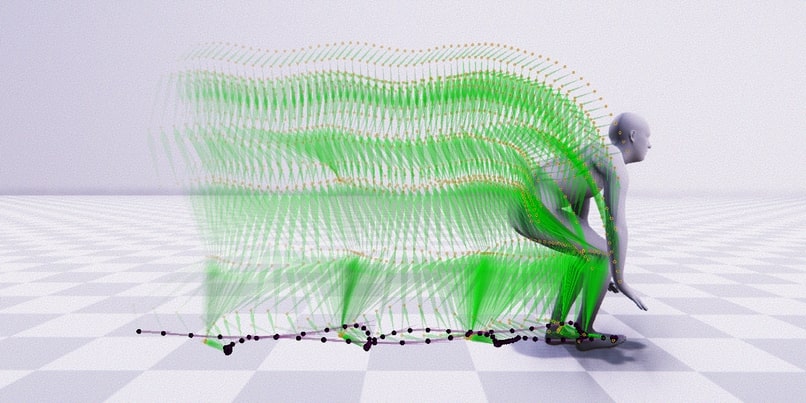

AGRoL is a novel diffusion-based approach to conditional motion synthesis.

With the recent surge in popularity of AR/VR applications, realistic and accurate control of 3D full-body avatars is in high demand. A particular challenge is that standalone head-mounted devices (HMDs) provide only a sparse tracking signal, often limited to the user’s head and wrists. While this signal is informative for reconstructing upper-body motion, the lower body is not tracked and must be synthesized from the limited information provided by the upper-body joints. In this paper, we present AGRoL, a novel conditional diffusion model purpose-built to track full bodies given sparse upper-body tracking signals. Our model uses a simple multi-layer perceptron (MLP) architecture and a novel conditioning scheme for motion data. It can predict accurate and smooth full-body motion, especially the challenging lower-body movement. Contrary to common diffusion architectures, our compact architecture can run in real time, making it usable for online body-tracking applications. We train and evaluate our model on the AMASS motion capture dataset and show that our approach outperforms state-of-the-art methods in generated motion accuracy and smoothness. We further justify our design choices through extensive experiments and ablations.

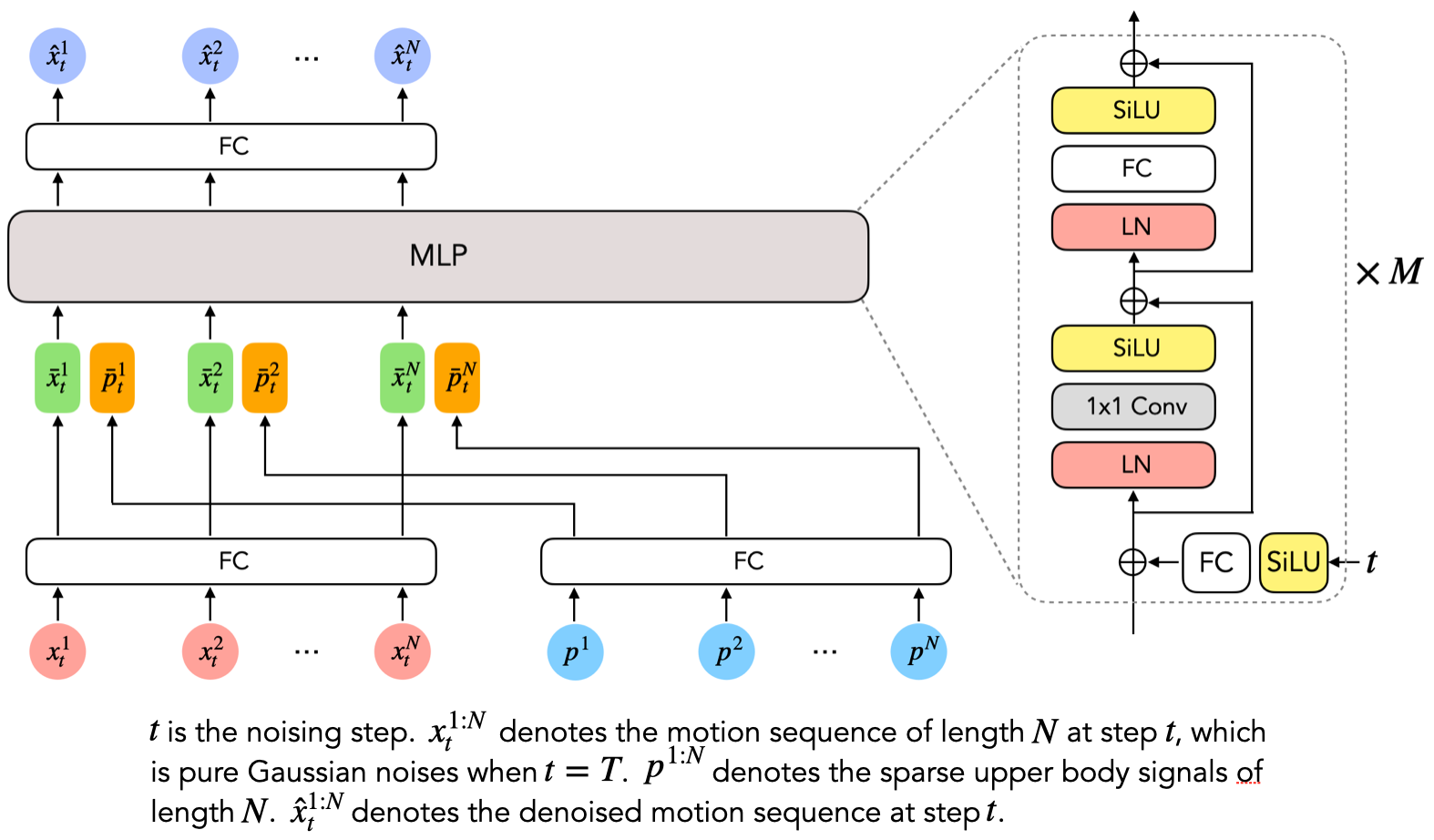

Our network is composed of only four types of components, all widely used in deep learning: fully connected (FC) layers, SiLU activation layers, 1D convolutional layers with kernel size 1, and layer normalization (LN). Note that a 1D convolutional layer with kernel size 1 can also be seen as a fully connected layer operating on a different dimension. The motion sequence is initialized from Gaussian noise and fed to the network after being combined with the upper-body signals.
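To make the description above concrete, here is a minimal NumPy sketch of one forward pass through a single block built from the four components. It is an illustration under stated assumptions, not the released implementation: the toy sizes, the parameter names (`w_t`, `w_f`, and so on), the concatenation used to combine the noisy motion with the sparse signal, and the ordering of residual connections and normalization are all hypothetical. The diffusion timestep embedding and the sampling loop are omitted. The sketch also checks the remark that a kernel-size-1 convolution over the frame axis equals a fully connected layer applied along that axis.

```python
import numpy as np

rng = np.random.default_rng(0)
# Toy sizes chosen for illustration only (not the paper's values).
T, D_SPARSE, D_MOTION, D_HIDDEN = 8, 6, 10, 16

def silu(x):
    # SiLU activation: x * sigmoid(x)
    return x / (1.0 + np.exp(-x))

def layer_norm(x, eps=1e-5):
    # Normalize each frame's feature vector (last axis); no learned scale/shift.
    mean = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return (x - mean) / np.sqrt(var + eps)

def fc(x, w, b):
    # Fully connected layer over the feature axis: (T, D_in) -> (T, D_out)
    return x @ w + b

def conv1d_k1(x, w, b):
    # Explicit 1D convolution with kernel size 1, treating the T frames as
    # channels and the features as the length axis.
    # x: (T_in, D), w: (T_out, T_in, 1), b: (T_out,)
    t_out = w.shape[0]
    out = np.empty((t_out, x.shape[1]))
    for o in range(t_out):
        for pos in range(x.shape[1]):
            out[o, pos] = np.sum(w[o, :, 0] * x[:, pos]) + b[o]
    return out

def mlp_block(x, p):
    # Assumed layout: temporal mixing, then feature mixing, each followed by
    # a residual connection and layer normalization.
    h = layer_norm(x + silu(conv1d_k1(x, p["w_t"], p["b_t"])))
    return layer_norm(h + silu(fc(h, p["w_f"], p["b_f"])))

# Randomly initialized (untrained) parameters, hypothetical names.
p = {
    "w_in": 0.1 * rng.standard_normal((D_MOTION + D_SPARSE, D_HIDDEN)),
    "b_in": np.zeros(D_HIDDEN),
    "w_t": 0.1 * rng.standard_normal((T, T, 1)),
    "b_t": np.zeros(T),
    "w_f": 0.1 * rng.standard_normal((D_HIDDEN, D_HIDDEN)),
    "b_f": np.zeros(D_HIDDEN),
    "w_out": 0.1 * rng.standard_normal((D_HIDDEN, D_MOTION)),
    "b_out": np.zeros(D_MOTION),
}

# The motion sequence starts as Gaussian noise; the sparse signal is a
# random stand-in for the tracked upper-body features.
noisy_motion = rng.standard_normal((T, D_MOTION))
sparse_signal = rng.standard_normal((T, D_SPARSE))

# Assumption: the two inputs are combined by concatenating along features.
x = np.concatenate([noisy_motion, sparse_signal], axis=-1)
h = fc(x, p["w_in"], p["b_in"])
h = mlp_block(h, p)
out = fc(h, p["w_out"], p["b_out"])  # one network output, shape (T, D_MOTION)

# A kernel-size-1 convolution over frames is a matrix product along the frame axis.
conv_out = conv1d_k1(h, p["w_t"], p["b_t"])
matmul_out = p["w_t"][:, :, 0] @ h + p["b_t"][:, None]
```

With these definitions, `conv_out` and `matmul_out` agree up to floating-point error, which is the sense in which the convolution acts as a fully connected layer on the frame dimension rather than the feature dimension.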

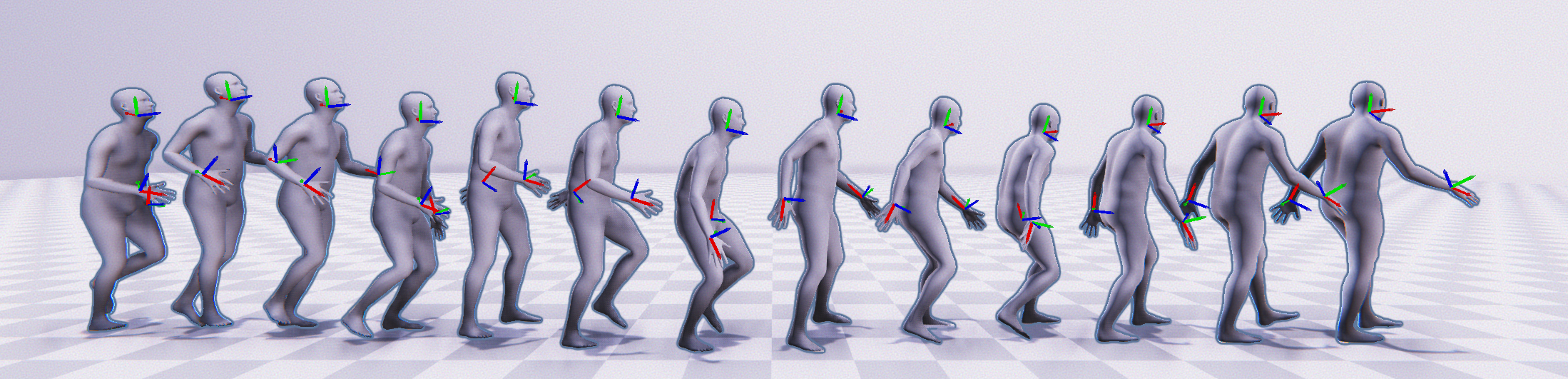

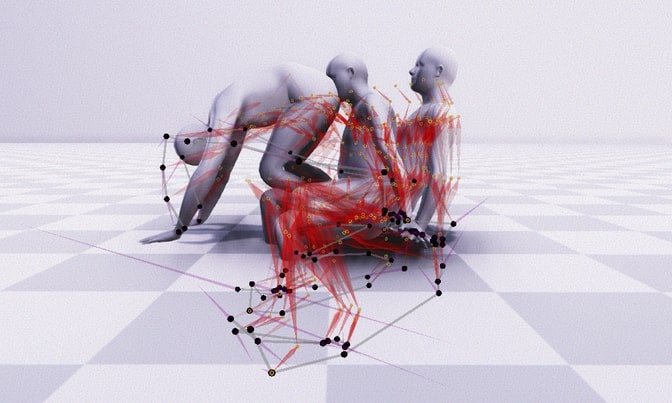

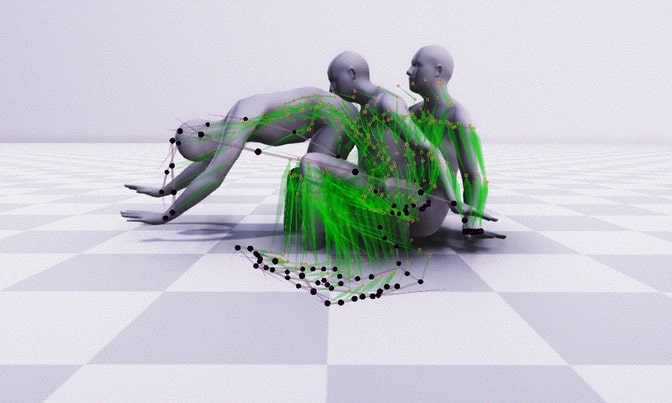

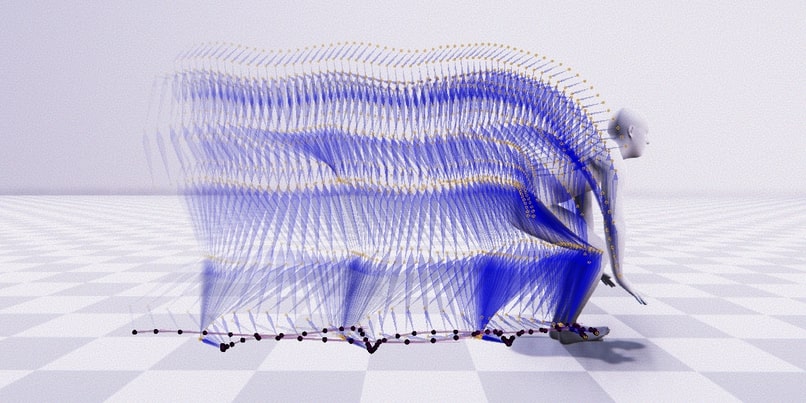

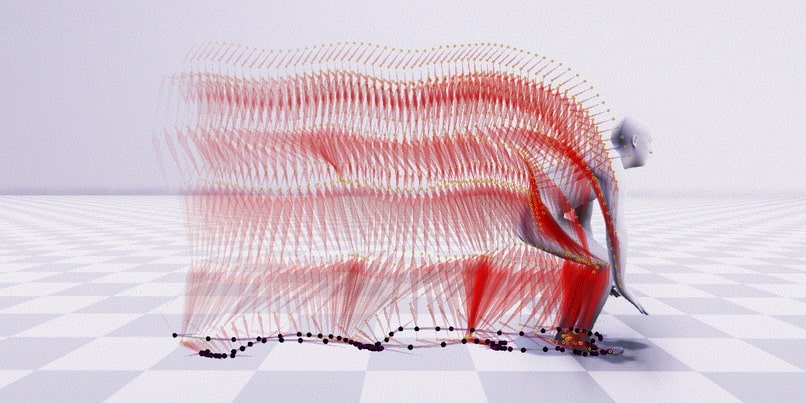

Here we show comparisons between AGRoL, AvatarPoser, and the ground truth (GT).

[Comparison panels: side-by-side results labeled GT, AvatarPoser, and AGRoL, plus one AGRoL-only panel.]

@inproceedings{du2023agrol,
  author = {Du, Yuming and Kips, Robin and Pumarola, Albert and Starke, Sebastian and Thabet, Ali and Sanakoyeu, Artsiom},
  title = {Avatars Grow Legs: Generating Smooth Human Motion from Sparse Tracking Inputs with Diffusion Model},
  booktitle = {CVPR},
  year = {2023},
}